
On February 24, as Russian forces began their invasion, stories of US-funded biolabs and bioweapon research in Ukraine began to spread on social media.

The false claims began in right-wing circles but soon spread more widely, and were picked up by Fox News host Tucker Carlson. It wasn’t long until the Russian government, which had spread tales of Ukrainian biolabs in the past, adopted the narrative as a belated justification for the invasion.

We studied how the biolabs narrative was amplified on Twitter, and made an unsettling (if not entirely surprising) discovery. Most of those responsible for sending the story viral were trying to debunk it, but only ended up giving it more oxygen.

Debunking gone wrong

We initially set out to look for coordinated groups of conspiracy theorists promoting the bioweapons theory.

To do this, we searched for Twitter accounts that retweeted posts mentioning both Ukraine and biolabs. Then, to see how these accounts were connected to each other, we looked at whether any two accounts retweeted the same thing at almost the same time. We found 1,469 such accounts, and 26,850 links between them.

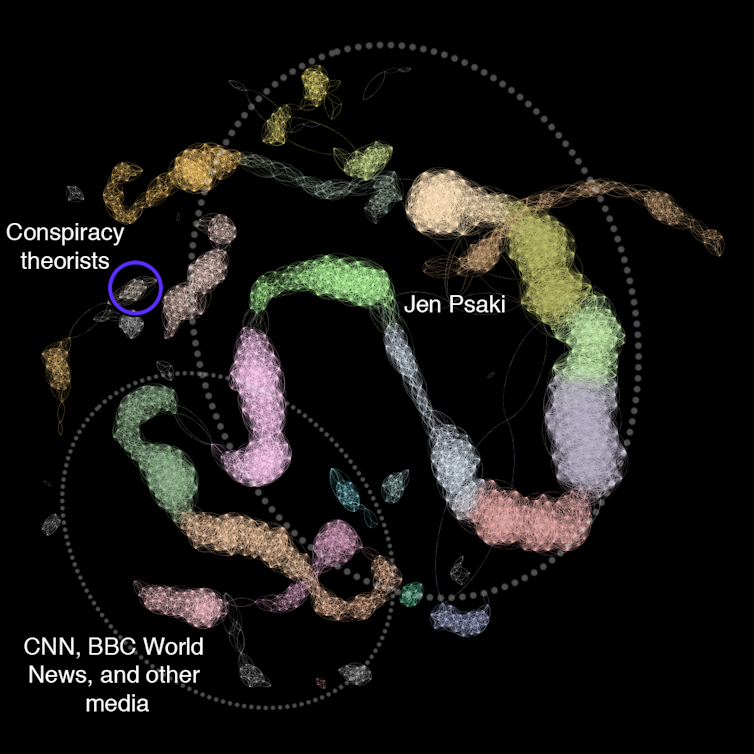

In our visualisation of the results, each dot is an account that retweeted at least one post about Ukraine biolabs. When two accounts retweeted the same thing within a minute of each other on more than one occasion, they are joined by a line.

As the visualisation shows, the accounts fall into clusters of coordinated retweeting behaviour. We found 50 such clusters, and 49 of them were trying to debunk the bioweapon theory. Only one small group (circled in blue) was trying to spread it.
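The linking rule described above can be sketched in a few lines of Python. This is not the authors’ code, which the article does not publish; it is a minimal sketch assuming each retweet is recorded as (account, original tweet ID, timestamp in seconds) and that an “occasion” means a distinct original tweet. The function names `co_retweet_edges` and `clusters` are hypothetical. The article also does not say how its 50 clusters were identified, so grouping linked accounts into connected components is used here only as the simplest stand-in; studies of this kind often use a community-detection algorithm instead.

```python
from collections import defaultdict

WINDOW_SECONDS = 60   # "within a minute of each other"
MIN_OCCASIONS = 2     # "on more than one occasion" (assumed: distinct original tweets)


def co_retweet_edges(retweets, window=WINDOW_SECONDS, min_occasions=MIN_OCCASIONS):
    """Return sorted (account_a, account_b) pairs that retweeted the same
    original tweet within `window` seconds of each other, on at least
    `min_occasions` distinct original tweets.

    `retweets` is an iterable of (account, original_tweet_id, timestamp_seconds).
    """
    # Group retweet events by the original tweet they share.
    by_original = defaultdict(list)
    for account, original_id, ts in retweets:
        by_original[original_id].append((ts, account))

    # For each unordered account pair, collect the originals they co-retweeted.
    occasions = defaultdict(set)
    for original_id, events in by_original.items():
        events.sort()  # chronological order
        for i, (t1, a1) in enumerate(events):
            for t2, a2 in events[i + 1:]:
                if t2 - t1 > window:
                    break  # later events are even further apart in time
                if a1 != a2:
                    pair = (a1, a2) if a1 < a2 else (a2, a1)
                    occasions[pair].add(original_id)

    return sorted(pair for pair, ids in occasions.items() if len(ids) >= min_occasions)


def clusters(edges):
    """Group linked accounts into connected components, largest first.

    A simple stand-in only: the article does not say how its clusters were found.
    """
    neighbours = defaultdict(set)
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)

    seen, groups = set(), []
    for start in neighbours:
        if start in seen:
            continue
        seen.add(start)
        stack, group = [start], []
        while stack:  # iterative depth-first search
            node = stack.pop()
            group.append(node)
            for nxt in neighbours[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        groups.append(sorted(group))
    return sorted(groups, key=len, reverse=True)


if __name__ == "__main__":
    # Hypothetical toy data, not from the study.
    sample = [
        ("alice", "t1", 0), ("bob", "t1", 30), ("carol", "t1", 200),
        ("alice", "t2", 1000), ("bob", "t2", 1045),
        ("carol", "t3", 5000), ("dave", "t3", 5010),
    ]
    edges = co_retweet_edges(sample)
    print(edges)            # [('alice', 'bob')]
    print(clusters(edges))  # [['alice', 'bob']]
```

In the toy data, alice and bob are linked because they retweeted two different originals within a minute of each other, while carol and dave did so only once and stay unlinked. Requiring repeated co-retweets is what separates sustained coordination from two accounts that happened to share the same popular post at the same moment.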

Within other large clusters in this network, we saw tweets from accounts working to debunk the bioweapon conspiracy, such as White House press secretary Jen Psaki, the Pentagon, the Kyiv Independent, and Sky News.

Our analysis concludes that those most prominent in spreading the narrative were those trying to debunk it. Most of the clusters were retweeting Psaki (right-hand dotted circle).

Disinformation for everyone

A useful starting point for understanding what’s going on is American scholar Kate Starbird’s idea of “participatory disinformation”.

This process often starts with highly visible users (like politicians, celebrities, or opinion leaders) disseminating news to their online audiences.

The biolabs conspiracy theory, however, began on the alt-tech platform Gab and gained traction on Twitter through the efforts of a fringe QAnon account. As discussion built on Twitter, the theory was picked up by the Chinese and Russian foreign affairs ministries, culminating in a segment on the Fox News program Tucker Carlson Tonight.

This is how a conspiracy theory becomes “news”. Audiences then filter that news through their own world views, which are already shaped by the media they regularly consume. They build, change and promote these interpretations in their own social networks.

“Grassroots” participants pick up the disinformation going round in their communities, augment and disseminate it; the process recurs in a self-reinforcing feedback loop.

By the time political players such as Psaki or Russian government officials tweet about a conspiracy theory, it doesn’t matter whether they’re trying to dispel it or boost it: they only end up giving it oxygen.

“We took note of Russia’s false claims about alleged U.S. biological weapons labs and chemical weapons development in Ukraine. We’ve also seen Chinese officials echo these conspiracy theories.”

– Jen Psaki (@PressSec) March 9, 2022

If working to debunk false narratives only continues the feedback loop, what else can be done?

Participatory disinformation cycles have helped land us in a crisis in how we, as a society, make sense of the world.

American political scientists Russell Muirhead and Nancy L. Rosenblum call the result of this crisis “new conspiracism”.

Where old-fashioned conspiratorial thinking relied on complex theories to justify its claims, for new conspiracists an idea can be true simply because it’s getting a lot of attention.

The spread of new conspiracism has intensified with the erosion of trust in traditional institutions over recent decades.

Donald Trump and other politicians around the world have worked to accelerate this erosion, but they’re only part of the problem.

A bigger part is that misinformation is lucrative for social media companies, and social media is integral to how we socialise and form opinions.

What can be done?

Time and again we have witnessed conspiracy theories spread on social media, contributing to political polarisation and undermining democratic authority.

It’s time we rethink our media ecosystem and how we regulate it, before trust in democratic institutions and principles declines further.

Addressing this is a Herculean task, and it’s not enough for individual countries to legislate and regulate platforms on their own. It needs to be a global effort. Financial sanctions are no longer enough: there needs to be systemic change that discourages platforms from profiting from mis- and disinformation.

Likewise, politicians and communicators such as Psaki need to be more aware that giving oxygen to these conspiracy theories can have unintended effects: attempts to raise awareness of them, or to debunk them, can amplify them worldwide.

For regular users of social media, the advice as always is to think twice before sharing or retweeting.

When a piece of content evokes a strong emotional response, this can often be a sign that false information is at play. If you really want to share something, taking a screenshot of it is preferable to retweeting the original, because it cuts the disinformer out of the chain instead of amplifying the source further.


Disclosure statement

Timothy Graham receives funding from the Australian Research Council for his Discovery Early Career Researcher Award (DE220101435), ‘Combatting Coordinated Inauthentic Behaviour on Social Media’. He also receives funding from the Australian Government Department of Defence.

Daniel Whelan-Shamy does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.